Shanhai Toolchain

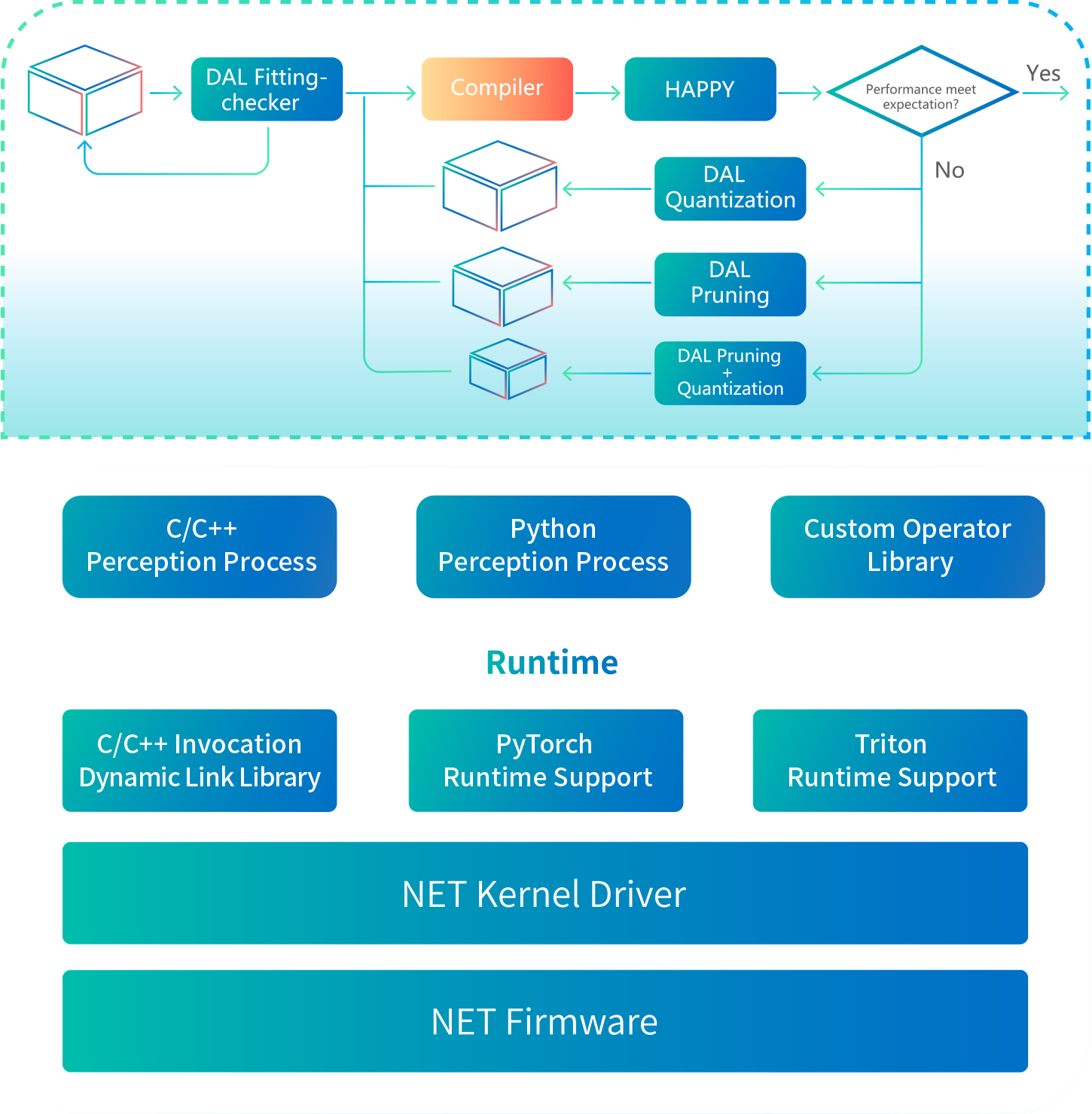

We launched the Shanhai Development Toolchain, an easy-to-use toolchain that lets algorithm developers build software for our SoCs. It was one of the first self-developed automotive-grade development toolchains in China.

Extensibility

Supporting the TensorFlow, PyTorch, and ONNX frameworks

High precision

Supporting post-training quantization (PTQ) and quantization-aware training (QAT) to preserve the precision of algorithm models
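To illustrate what post-training quantization involves, here is a minimal sketch in plain Python. It is not the Shanhai toolchain's API: the function names `calibrate`, `quantize`, and `dequantize` are hypothetical, and the 8-bit unsigned affine scheme is an assumption chosen for illustration. The quantize-then-dequantize round trip shown here is also the operation that QAT typically simulates during training.

```python
def calibrate(values, num_bits=8):
    """Derive an affine (scale, zero_point) pair from observed float values."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0  # degenerate case: every observed value is zero
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, num_bits=8):
    """Map floats to integers in [0, 2**num_bits - 1], clamping out-of-range values."""
    qmin, qmax = 0, 2 ** num_bits - 1
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map quantized integers back to approximate float values."""
    return [(q - zero_point) * scale for q in q_values]


# Hypothetical weights standing in for one tensor of a trained model.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
scale, zero_point = calibrate(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)

# The round-trip error indicates how much precision the quantization costs.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, max_error)
```

In this sketch the worst-case round-trip error stays within one quantization step (`scale`). A production toolchain would usually calibrate on representative input data and per tensor or per channel instead of on a single small list.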

Completeness

Equipped with a Model Zoo of NN and deep learning models, along with instructions that help customers develop and deploy algorithms quickly and easily

A complete toolchain SDK and application support help customers deploy optimized models with ease

Flexibility

Flexible deployment with Docker images

Black Sesame Acceleration Runtime

Model Compiler uses the industry-standard MLIR framework, supporting the conversion of models from ONNX, PyTorch, TensorFlow, etc.

Native compatibility with PyTorch’s inference API, supporting Python programming and deployment.

Supports custom operator programming in Python with Triton, automatically compiling to hardware-accelerated code.